Exported source

import math,torch,matplotlib.pyplot as plt
import fastcore.all as fc
from collections.abc import Mapping
from operator import attrgetter
from functools import partial
from copy import copy

from torch import optim
import torch.nn.functional as F

from fastAIcourse.conv import *

from fastprogress import progress_bar,master_bar

Exported source

import matplotlib as mpl
import torchvision.transforms.functional as TF
from contextlib import contextmanager
from torch import nn,tensor
from datasets import load_dataset,load_dataset_builder
from fastAIcourse.datasets import *
from fastAIcourse.conv import *
import logging
from fastcore.test import test_close

torch.set_printoptions(precision=2, linewidth=140, sci_mode=False)
torch.manual_seed(1)
mpl.rcParams['image.cmap'] = 'gray'

x,y = 'image','label'
name = "fashion_mnist"
dsd = load_dataset(name)

@inplace
def transformi(b): b[x] = [torch.flatten(TF.to_tensor(o)) for o in b[x]]

bs = 1024
tds = dsd.with_transform(transformi)

dls = DataLoaders.from_dd(tds, bs, num_workers=8)
dt = dls.train
xb,yb = next(iter(dt))
xb.shape,yb[:10]

(torch.Size([1024, 784]), tensor([5, 4, 9, 4, 3, 0, 6, 5, 7, 6]))

Exported source

class Learner:
    def __init__(self, model, dls, loss_func, lr, opt_func=optim.SGD): fc.store_attr()

    def fit(self, n_epochs):
        self.accs,self.losses,self.ns = [],[],[]
        self.model.to(def_device)
        self.opt = self.opt_func(self.model.parameters(), self.lr)
        self.n_epochs = n_epochs
        for self.epoch in range(n_epochs):
            self.one_epoch(True)
            with torch.no_grad(): self.one_epoch(False)

    def one_epoch(self, train):
        self.model.training = train
        dl = self.dls.train if train else self.dls.valid
        for self.num,self.batch in enumerate(dl): self.one_batch()
        n = sum(self.ns)
        # epoch, training flag, mean loss, mean accuracy
        print(self.epoch, self.model.training, sum(self.losses).item()/n, sum(self.accs).item()/n)

    def one_batch(self):
        self.xb,self.yb = to_device(self.batch)
        self.preds = self.model(self.xb)
        self.loss = self.loss_func(self.preds, self.yb)
        if self.model.training:
            self.loss.backward()
            self.opt.step()
            self.opt.zero_grad()
        with torch.no_grad(): self.calc_stats()

    def calc_stats(self):
        # store per-batch sums so the epoch averages are weighted by batch size
        acc = (self.preds.argmax(dim=1)==self.yb).float().sum()
        self.accs.append(acc)
        n = len(self.xb)
        self.losses.append(self.loss*n)
        self.ns.append(n)

m,nh = 28*28,50
model = nn.Sequential(nn.Linear(m,nh), nn.ReLU(), nn.Linear(nh,10))

learn = Learner(model, dls, F.cross_entropy, lr=0.2)
learn.fit(1)

0 True 1.1753037760416667 0.5987

0 False 1.1203111607142857 0.6135857142857143

Basic Callbacks Learner

Exported source

class CancelFitException(Exception): pass
class CancelBatchException(Exception): pass
class CancelEpochException(Exception): pass

Exported source

class Callback(): order = 0

Exported source

def run_cbs(cbs, method_nm, learn=None):
    # call `method_nm` on every callback that defines it, lowest `order` first
    for cb in sorted(cbs, key=attrgetter('order')):
        method = getattr(cb, method_nm, None)
        if method is not None: method(learn)

Exported source

class CompletionCB(Callback):
    def before_fit(self, learn): self.count = 0
    def after_batch(self, learn): self.count += 1
    def after_fit(self, learn): print(f'Completed {self.count} batches')

cbs = [CompletionCB()]
run_cbs(cbs, 'before_fit')
run_cbs(cbs, 'after_batch')
run_cbs(cbs, 'after_fit')
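To make the dispatch rule concrete, here is a minimal standalone sketch of the same mechanism with no training code involved. `FirstCB`, `SecondCB` and the `calls` list are hypothetical names used only for illustration; `Callback` and `run_cbs` are repeated so that the block runs on its own.

```python
from operator import attrgetter

class Callback: order = 0

def run_cbs(cbs, method_nm, learn=None):
    # Lowest `order` first; callbacks that lack the method are skipped
    for cb in sorted(cbs, key=attrgetter('order')):
        method = getattr(cb, method_nm, None)
        if method is not None: method(learn)

calls = []  # hypothetical log of which callback method ran, in order

class SecondCB(Callback):
    order = 1
    def before_fit(self, learn): calls.append('second.before_fit')

class FirstCB(Callback):
    # inherits order = 0, so it runs before SecondCB whatever its list position
    def before_fit(self, learn): calls.append('first.before_fit')
    def after_fit(self, learn): calls.append('first.after_fit')

cbs = [SecondCB(), FirstCB()]
run_cbs(cbs, 'before_fit')
run_cbs(cbs, 'after_fit')   # SecondCB has no after_fit, so it is silently skipped
print(calls)  # ['first.before_fit', 'second.before_fit', 'first.after_fit']
```

The position of a callback in the list only matters as a tie-break between callbacks with equal `order`, because `sorted` is stable.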

Exported source

class Learner():
    def __init__(self, model, dls, loss_func, lr, cbs, opt_func=optim.SGD): fc.store_attr()

    def one_batch(self):
        self.preds = self.model(self.batch[0])
        self.loss = self.loss_func(self.preds, self.batch[1])
        if self.model.training:
            self.loss.backward()
            self.opt.step()
            self.opt.zero_grad()

    def one_epoch(self, train):
        self.model.train(train)
        self.dl = self.dls.train if train else self.dls.valid
        try:
            self.callback('before_epoch')
            for self.iter,self.batch in enumerate(self.dl):
                try:
                    self.callback('before_batch')
                    self.one_batch()
                    self.callback('after_batch')
                except CancelBatchException: pass
            self.callback('after_epoch')
        except CancelEpochException: pass

    def fit(self, n_epochs):
        self.n_epochs = n_epochs
        self.epochs = range(n_epochs)
        self.opt = self.opt_func(self.model.parameters(), self.lr)
        try:
            self.callback('before_fit')
            for self.epoch in self.epochs:
                self.one_epoch(True)
                self.one_epoch(False)
            self.callback('after_fit')
        except CancelFitException: pass

    def callback(self, method_nm): run_cbs(self.cbs, method_nm, self)

Exported source

def get_model(): return nn.Sequential(nn.Linear(m,nh), nn.ReLU(), nn.Linear(nh,10))

model = get_model()
learn = Learner(model, dls, F.cross_entropy, lr=0.2, cbs=[CompletionCB()])
learn.fit(1)

Exported source

class SingleBatchCB(Callback):
    order = 1
    def after_batch(self, learn): raise CancelFitException()

learn = Learner(get_model(), dls, F.cross_entropy, lr=0.2, cbs=[SingleBatchCB(), CompletionCB()])
learn.fit(1)
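The three cancel exceptions differ only in how far they unwind the nested loops. The following is a rough sketch of that control flow; `toy_fit` and the three `stop_*` functions are hypothetical stand-ins that mirror the try/except nesting of the Learner above without any model or data.

```python
class CancelFitException(Exception): pass
class CancelEpochException(Exception): pass
class CancelBatchException(Exception): pass

def toy_fit(n_epochs, n_batches, after_batch):
    "Hypothetical stand-in for Learner.fit: same try/except nesting, no model."
    done = []  # (epoch, batch) pairs that were started
    try:
        for epoch in range(n_epochs):
            try:
                for i in range(n_batches):
                    try:
                        done.append((epoch, i))
                        after_batch(epoch, i)
                    except CancelBatchException: pass   # move on to the next batch
            except CancelEpochException: pass           # move on to the next epoch
    except CancelFitException: pass                     # stop training entirely
    return done

def stop_fit(epoch, i): raise CancelFitException()
def stop_epoch(epoch, i): raise CancelEpochException()
def stop_batch(epoch, i): raise CancelBatchException()

print(toy_fit(2, 3, stop_fit))         # [(0, 0)]
print(toy_fit(2, 3, stop_epoch))       # [(0, 0), (1, 0)]
print(len(toy_fit(2, 3, stop_batch)))  # 6
```

Raising `CancelFitException` from `after_batch`, as `SingleBatchCB` does, therefore ends training after the first batch.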

Metrics

Exported source

class Metric:
    def __init__(self): self.reset()
    def reset(self): self.vals,self.ns = [],[]
    def add(self, inp, targ=None, n=1):
        self.last = self.calc(inp, targ)
        self.vals.append(self.last)
        self.ns.append(n)
    @property
    def value(self):
        # average of the stored values, weighted by the batch sizes
        ns = tensor(self.ns)
        return (tensor(self.vals)*ns).sum()/ns.sum()
    def calc(self, inps, targs): return inps

Exported source

class Accuracy(Metric):
    def calc(self, inps, targs): return (inps==targs).float().mean()

acc = Accuracy()
acc.add(tensor([0, 1, 2, 0, 1, 2]), tensor([0, 1, 1, 2, 1, 0]))
acc.add(tensor([1, 1, 2, 0, 1]), tensor([0, 1, 1, 2, 1]))
acc.value

loss = Metric()
loss.add(0.6, n=32)
loss.add(0.9, n=2)
loss.value, round((0.6*32+0.9*2)/(32+2), 2)
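The check above compares `Metric.value` with a size-weighted average. As a small worked example in plain Python (no tensors; the variable names are illustrative only), this shows why the weighting matters when the last batch is smaller than the others:

```python
vals = [0.6, 0.9]   # per-batch metric values
ns   = [32, 2]      # batch sizes

# Weighted mean: each batch counts in proportion to its size
weighted = sum(v*n for v, n in zip(vals, ns)) / sum(ns)
# Naive mean: the 2-item batch counts as much as the 32-item batch
unweighted = sum(vals) / len(vals)

print(round(weighted, 2), round(unweighted, 2))  # 0.62 0.75
```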

Some callbacks

pip install torcheval

Exported source

from torcheval.metrics import MulticlassAccuracy,Mean

metric = MulticlassAccuracy()
metric.update(tensor([0, 2, 1, 3]), tensor([0, 1, 2, 3]))
metric.compute()

Exported source

def to_cpu(x):
    # recurse through dicts, lists and tuples; move tensors to the CPU
    if isinstance(x, Mapping): return {k:to_cpu(v) for k,v in x.items()}
    if isinstance(x, list): return [to_cpu(o) for o in x]
    if isinstance(x, tuple): return tuple(to_cpu(list(x)))
    res = x.detach().cpu()
    return res.float() if res.dtype==torch.float16 else res

Exported source

class MetricsCB(Callback):
    def __init__(self, *ms, **metrics):
        # positional metrics are keyed by their class name
        for o in ms: metrics[type(o).__name__] = o
        self.metrics = metrics
        self.all_metrics = copy(metrics)
        self.all_metrics['loss'] = self.loss = Mean()

    def _log(self, d): print(d)
    def before_fit(self, learn): learn.metrics = self
    def before_epoch(self, learn): [o.reset() for o in self.all_metrics.values()]

    def after_epoch(self, learn):
        log = {k:f'{v.compute():.3f}' for k,v in self.all_metrics.items()}
        log['epoch'] = learn.epoch
        log['train'] = 'train' if learn.model.training else 'eval'
        self._log(log)

    def after_batch(self, learn):
        x,y,*_ = to_cpu(learn.batch)
        for m in self.metrics.values(): m.update(to_cpu(learn.preds), y)
        self.loss.update(to_cpu(learn.loss), weight=len(x))

Exported source

class DeviceCB(Callback):
    def __init__(self, device=def_device): fc.store_attr()
    def before_fit(self, learn):
        if hasattr(learn.model, 'to'): learn.model.to(self.device)
    def before_batch(self, learn): learn.batch = to_device(learn.batch, device=self.device)

model = get_model()
metrics = MetricsCB(accuracy=MulticlassAccuracy())
learn = Learner(model, dls, F.cross_entropy, lr=0.2, cbs=[DeviceCB(), metrics])
learn.fit(5)

{'accuracy': '0.602', 'loss': '1.183', 'epoch': 0, 'train': 'train'}

{'accuracy': '0.700', 'loss': '0.847', 'epoch': 0, 'train': 'eval'}

{'accuracy': '0.733', 'loss': '0.738', 'epoch': 1, 'train': 'train'}

{'accuracy': '0.772', 'loss': '0.646', 'epoch': 1, 'train': 'eval'}

{'accuracy': '0.773', 'loss': '0.631', 'epoch': 2, 'train': 'train'}

{'accuracy': '0.786', 'loss': '0.604', 'epoch': 2, 'train': 'eval'}

{'accuracy': '0.797', 'loss': '0.574', 'epoch': 3, 'train': 'train'}

{'accuracy': '0.801', 'loss': '0.562', 'epoch': 3, 'train': 'eval'}

{'accuracy': '0.809', 'loss': '0.539', 'epoch': 4, 'train': 'train'}

{'accuracy': '0.795', 'loss': '0.558', 'epoch': 4, 'train': 'eval'}

Flexible learner

Exported source

class Learner():
    def __init__(self, model, dls=(0,), loss_func=F.mse_loss, lr=0.1, cbs=None, opt_func=optim.SGD):
        cbs = fc.L(cbs)
        fc.store_attr()

    @contextmanager
    def cb_ctx(self, nm):
        try:
            self.callback(f'before_{nm}')
            yield
            self.callback(f'after_{nm}')
        except globals()[f'Cancel{nm.title()}Exception']: pass
        finally: self.callback(f'cleanup_{nm}')

    def one_epoch(self, train):
        self.model.train(train)
        self.dl = self.dls.train if train else self.dls.valid
        with self.cb_ctx('epoch'):
            for self.iter,self.batch in enumerate(self.dl):
                with self.cb_ctx('batch'):
                    self.predict()
                    self.get_loss()
                    if self.training:
                        self.backward()
                        self.step()
                        self.zero_grad()

    def fit(self, n_epochs=1, train=True, valid=True, cbs=None, lr=None):
        cbs = fc.L(cbs)
        # `add_cb` and `rm_cb` were added in lesson 18
        for cb in cbs: self.cbs.append(cb)
        try:
            self.n_epochs = n_epochs
            self.epochs = range(n_epochs)
            self.opt = self.opt_func(self.model.parameters(), self.lr if lr is None else lr)
            with self.cb_ctx('fit'):
                for self.epoch in self.epochs:
                    if train: self.one_epoch(True)
                    if valid: torch.no_grad()(self.one_epoch)(False)
        finally:
            for cb in cbs: self.cbs.remove(cb)

    def __getattr__(self, name):
        # the training steps are not defined here; they are delegated to callbacks
        if name in ('predict','get_loss','backward','step','zero_grad'): return partial(self.callback, name)
        raise AttributeError(name)

    def callback(self, method_nm): run_cbs(self.cbs, method_nm, self)

    @property
    def training(self): return self.model.training

Exported source

class TrainCB(Callback):
    def __init__(self, n_inp=1): self.n_inp = n_inp
    def predict(self, learn): learn.preds = learn.model(*learn.batch[:self.n_inp])
    def get_loss(self, learn): learn.loss = learn.loss_func(learn.preds, *learn.batch[self.n_inp:])
    def backward(self, learn): learn.loss.backward()
    def step(self, learn): learn.opt.step()
    def zero_grad(self, learn): learn.opt.zero_grad()

NB: I added self.n_inp after the lesson. This allows us to train models with more than one input or output.

Exported source

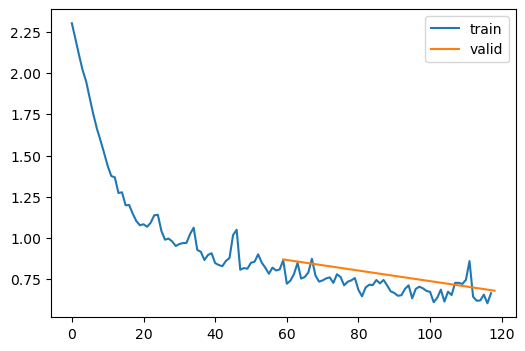

class ProgressCB(Callback):
    order = MetricsCB.order+1
    def __init__(self, plot=False): self.plot = plot

    def before_fit(self, learn):
        learn.epochs = self.mbar = master_bar(learn.epochs)
        self.first = True
        if hasattr(learn, 'metrics'): learn.metrics._log = self._log
        self.losses = []
        self.val_losses = []

    def _log(self, d):
        if self.first:
            # write the column names once, before the first row
            self.mbar.write(list(d), table=True)
            self.first = False
        self.mbar.write(list(d.values()), table=True)

    def before_epoch(self, learn): learn.dl = progress_bar(learn.dl, leave=False, parent=self.mbar)

    def after_batch(self, learn):
        learn.dl.comment = f'{learn.loss:.3f}'
        if self.plot and hasattr(learn, 'metrics') and learn.training:
            self.losses.append(learn.loss.item())
            if self.val_losses: self.mbar.update_graph([[fc.L.range(self.losses), self.losses],[fc.L.range(learn.epoch).map(lambda x: (x+1)*len(learn.dls.train)), self.val_losses]])

    def after_epoch(self, learn):
        if not learn.training:
            if self.plot and hasattr(learn, 'metrics'):
                self.val_losses.append(learn.metrics.all_metrics['loss'].compute())
                self.mbar.update_graph([[fc.L.range(self.losses), self.losses],[fc.L.range(learn.epoch+1).map(lambda x: (x+1)*len(learn.dls.train)), self.val_losses]])

NB: Added validation loss plotting after the lesson.

metrics = MetricsCB(accuracy=MulticlassAccuracy())
cbs = [TrainCB(), DeviceCB(), metrics, ProgressCB(plot=True)]
learn = Learner(model, dls, F.cross_entropy, lr=0.2, cbs=cbs)
learn.fit(2)

| accuracy | loss | epoch | train |
|---|---|---|---|
| 0.620 | 1.149 | 0 | train |
| 0.704 | 0.870 | 0 | eval |
| 0.741 | 0.714 | 1 | train |
| 0.742 | 0.681 | 1 | eval |

Updated versions since the lesson

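After the lesson we noticed that contextlib.contextmanager has a surprising “feature”: if a callback raises one of our cancel exceptions before the yield and we swallow it, the generator finishes without yielding and contextmanager raises a RuntimeError. Therefore we’ve replaced the context manager with a decorator in this updated version of Learner. This version also adds a few more callbacks (after_predict, after_loss, after_backward and after_step) to the batch loop run by one_epoch().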
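The behaviour can be reproduced without any of the training code. In this minimal sketch, `cb_ctx` is a cut-down version of the earlier method and `cancel` is a hypothetical stand-in for a `before_batch` callback that raises.

```python
from contextlib import contextmanager

class CancelBatchException(Exception): pass

@contextmanager
def cb_ctx(before):
    try:
        before()      # stands in for the before_batch callbacks
        yield
    except CancelBatchException: pass   # swallowed, so the generator ends without yielding

def cancel(): raise CancelBatchException()

try:
    with cb_ctx(cancel):
        print('body')   # never reached
    outcome = 'cancelled cleanly'
except RuntimeError as e:
    outcome = f'RuntimeError: {e}'

print(outcome)  # RuntimeError: generator didn't yield
```

A generator-based context manager has to reach its `yield` exactly once, so the cancel exception cannot simply be swallowed before that point.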

Exported source

class with_cbs:
    def __init__(self, nm): self.nm = nm
    def __call__(self, f):
        def _f(o, *args, **kwargs):
            try:
                o.callback(f'before_{self.nm}')
                f(o, *args, **kwargs)
                o.callback(f'after_{self.nm}')
            except globals()[f'Cancel{self.nm.title()}Exception']: pass
            finally: o.callback(f'cleanup_{self.nm}')
        return _f
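As a quick illustration of what the decorator does, the sketch below applies it to a toy object. `ToyLearner` and its `events` list are hypothetical and only record the order of calls; `with_cbs` is repeated so the block is self-contained.

```python
class CancelBatchException(Exception): pass

class with_cbs:
    def __init__(self, nm): self.nm = nm
    def __call__(self, f):
        def _f(o, *args, **kwargs):
            try:
                o.callback(f'before_{self.nm}')
                f(o, *args, **kwargs)
                o.callback(f'after_{self.nm}')
            # 'batch'.title() == 'Batch', so this looks up CancelBatchException
            except globals()[f'Cancel{self.nm.title()}Exception']: pass
            finally: o.callback(f'cleanup_{self.nm}')
        return _f

class ToyLearner:
    "Hypothetical minimal object: records events instead of running callbacks."
    def __init__(self, cancel_on=None): self.events, self.cancel_on = [], cancel_on
    def callback(self, nm):
        self.events.append(nm)
        if nm == self.cancel_on: raise CancelBatchException()
    @with_cbs('batch')
    def one_batch(self): self.events.append('work')

normal = ToyLearner(); normal.one_batch()
skipped = ToyLearner(cancel_on='before_batch'); skipped.one_batch()
print(normal.events)   # ['before_batch', 'work', 'after_batch', 'cleanup_batch']
print(skipped.events)  # ['before_batch', 'cleanup_batch']
```

Because the wrapper is an ordinary function, cancelling in the `before_` stage skips the work and the `after_` stage but still runs the `cleanup_` stage.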

Exported source

class Learner():
    def __init__(self, model, dls=(0,), loss_func=F.mse_loss, lr=0.1, cbs=None, opt_func=optim.SGD):
        cbs = fc.L(cbs)
        fc.store_attr()

    @with_cbs('batch')
    def _one_batch(self):
        self.predict()
        self.callback('after_predict')
        self.get_loss()
        self.callback('after_loss')
        if self.training:
            self.backward()
            self.callback('after_backward')
            self.step()
            self.callback('after_step')
            self.zero_grad()

    @with_cbs('epoch')
    def _one_epoch(self):
        for self.iter,self.batch in enumerate(self.dl): self._one_batch()

    def one_epoch(self, training):
        self.model.train(training)
        self.dl = self.dls.train if training else self.dls.valid
        self._one_epoch()

    @with_cbs('fit')
    def _fit(self, train, valid):
        for self.epoch in self.epochs:
            if train: self.one_epoch(True)
            if valid: torch.no_grad()(self.one_epoch)(False)

    def fit(self, n_epochs=1, train=True, valid=True, cbs=None, lr=None):
        cbs = fc.L(cbs)
        # `add_cb` and `rm_cb` were added in lesson 18
        for cb in cbs: self.cbs.append(cb)
        try:
            self.n_epochs = n_epochs
            self.epochs = range(n_epochs)
            if lr is None: lr = self.lr
            if self.opt_func: self.opt = self.opt_func(self.model.parameters(), lr)
            self._fit(train, valid)
        finally:
            for cb in cbs: self.cbs.remove(cb)

    def __getattr__(self, name):
        if name in ('predict','get_loss','backward','step','zero_grad'): return partial(self.callback, name)
        raise AttributeError(name)

    def callback(self, method_nm): run_cbs(self.cbs, method_nm, self)

    @property
    def training(self): return self.model.training

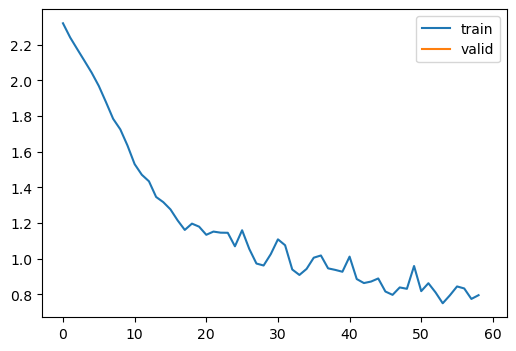

model = get_model()
metrics = MetricsCB(accuracy=MulticlassAccuracy())
cbs = [TrainCB(), DeviceCB(), metrics, ProgressCB(plot=True)]
learn = Learner(model, dls, F.cross_entropy, lr=0.2, cbs=cbs)
learn.fit(1)

| accuracy | loss | epoch | train |
|---|---|---|---|
| 0.617 | 1.184 | 0 | train |
| 0.690 | 0.882 | 0 | eval |

TrainLearner and MomentumLearner

Exported source

class TrainLearner(Learner):
    def predict(self): self.preds = self.model(self.batch[0])
    def get_loss(self): self.loss = self.loss_func(self.preds, self.batch[1])
    def backward(self): self.loss.backward()
    def step(self): self.opt.step()
    def zero_grad(self): self.opt.zero_grad()
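Subclassing works here because Python only calls `__getattr__` when normal attribute lookup fails, so a method defined on a subclass takes precedence over the callback dispatch. A minimal sketch of that rule, using the hypothetical classes `Base` and `Sub` in place of `Learner` and `TrainLearner`:

```python
from functools import partial

class Base:
    "Hypothetical stripped-down Learner: only the dispatch logic is kept."
    def __init__(self): self.log = []
    def callback(self, nm): self.log.append(f'callback:{nm}')
    def __getattr__(self, name):
        # Only reached when normal attribute lookup fails
        if name in ('predict', 'get_loss'): return partial(self.callback, name)
        raise AttributeError(name)

class Sub(Base):
    # Found by normal lookup, so __getattr__ is bypassed for `predict`
    def predict(self): self.log.append('method:predict')

s = Sub()
s.predict()
s.get_loss()   # not defined on Sub, so it falls through to the callbacks
print(s.log)   # ['method:predict', 'callback:get_loss']
```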

Exported source

class MomentumLearner(TrainLearner):
    def __init__(self, model, dls, loss_func, lr=None, cbs=None, opt_func=optim.SGD, mom=0.85):
        self.mom = mom
        super().__init__(model, dls, loss_func, lr, cbs, opt_func)

    def zero_grad(self):
        # scale the gradients down instead of zeroing them, so they carry over to the next batch
        with torch.no_grad():
            for p in self.model.parameters(): p.grad *= self.mom
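Since `backward()` adds new gradients to whatever is already stored in `.grad`, decaying the stored value instead of zeroing it yields a running, exponentially weighted sum of past gradients. The sketch below traces this with plain floats; the constant gradient of 1.0 is a made-up value chosen to keep the arithmetic easy to follow.

```python
mom = 0.85
grad = 0.0                         # plays the role of p.grad
history = []
for new_grad in [1.0, 1.0, 1.0]:   # hypothetical per-batch gradients
    grad += new_grad               # what loss.backward() does: accumulate into .grad
    history.append(grad)           # the value the optimizer step would see
    grad *= mom                    # MomentumLearner.zero_grad: decay instead of zeroing

print([round(g, 4) for g in history])  # [1.0, 1.85, 2.5725]
```

With a constant gradient the accumulated value tends toward 1/(1-mom), about 6.67 for mom=0.85, so the effective step is larger than the learning rate alone suggests.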

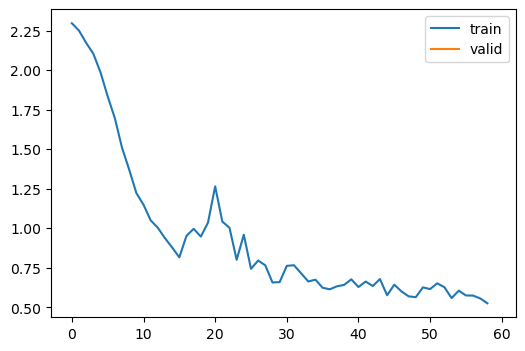

# NB: No TrainCB
metrics = MetricsCB(accuracy=MulticlassAccuracy())
cbs = [DeviceCB(), metrics, ProgressCB(plot=True)]
learn = MomentumLearner(get_model(), dls, F.cross_entropy, lr=0.1, cbs=cbs)
learn.fit(1)

| accuracy | loss | epoch | train |
|---|---|---|---|
| 0.682 | 0.938 | 0 | train |
| 0.797 | 0.576 | 0 | eval |

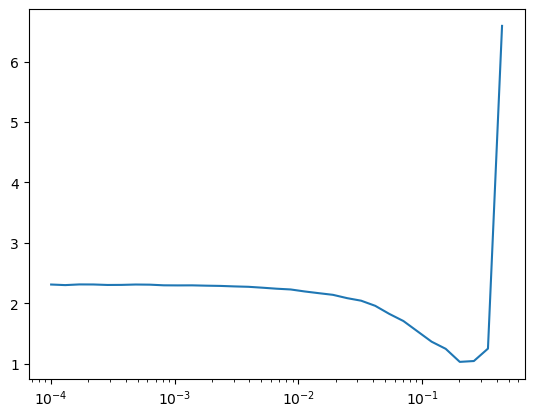

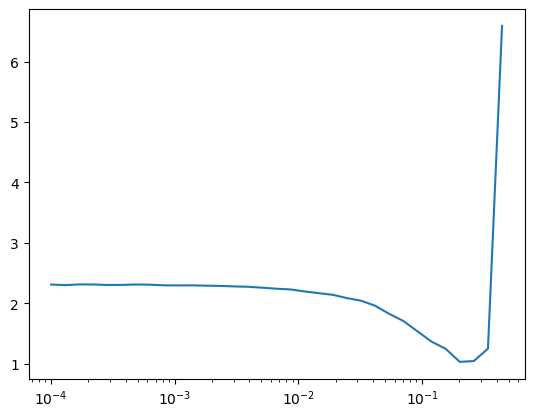

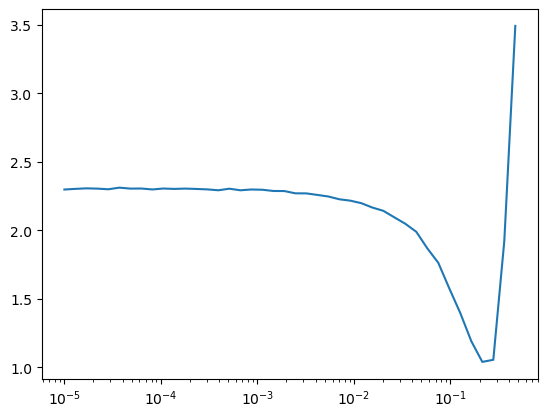

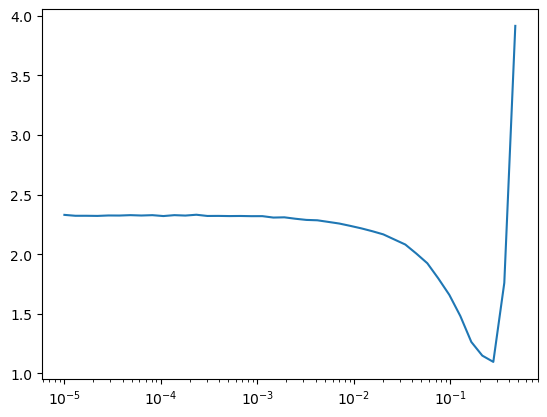

LRFinderCB

Exported source

class LRFinderCB(Callback):
    def __init__(self, lr_mult=1.3): fc.store_attr()

    def before_fit(self, learn):
        self.lrs,self.losses = [],[]
        self.min = math.inf

    def after_batch(self, learn):
        if not learn.training: raise CancelEpochException()
        self.lrs.append(learn.opt.param_groups[0]['lr'])
        loss = to_cpu(learn.loss)
        self.losses.append(loss)
        if loss < self.min: self.min = loss
        # stop once the loss is three times worse than the best seen so far
        if loss > self.min*3: raise CancelFitException()
        for g in learn.opt.param_groups: g['lr'] *= self.lr_mult

    def plot(self):
        plt.plot(self.lrs, self.losses)
        plt.xscale('log')

lrfind = LRFinderCB()
cbs = [DeviceCB(), lrfind]
learn = MomentumLearner(get_model(), dls, F.cross_entropy, lr=1e-4, cbs=cbs)
learn.fit(1)
plt.plot(lrfind.lrs, lrfind.losses)
plt.xscale('log')

Exported source

from torch.optim.lr_scheduler import ExponentialLR

ExponentialLR

Exported source

class LRFinderCB(Callback):
    def __init__(self, gamma=1.3, max_mult=3): fc.store_attr()

    def before_fit(self, learn):
        self.sched = ExponentialLR(learn.opt, self.gamma)
        self.lrs,self.losses = [],[]
        self.min = math.inf

    def after_batch(self, learn):
        if not learn.training: raise CancelEpochException()
        self.lrs.append(learn.opt.param_groups[0]['lr'])
        loss = to_cpu(learn.loss)
        self.losses.append(loss)
        if loss < self.min: self.min = loss
        if math.isnan(loss) or (loss > self.min*self.max_mult):
            raise CancelFitException()
        self.sched.step()

    def cleanup_fit(self, learn):
        plt.plot(self.lrs, self.losses)
        plt.xscale('log')
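To see how the learning rate schedule and the stopping rule interact, here is a pure-Python sketch of the same loop. `lr_range_test` and `fake_loss` are hypothetical: the loss is a synthetic bowl in log10(lr) rather than a real training loss, and multiplying `lr` by `gamma` stands in for one `ExponentialLR.step()`.

```python
import math

def lr_range_test(loss_fn, start_lr=1e-5, gamma=1.3, max_mult=3, max_steps=1000):
    "Hypothetical pure-Python analogue of LRFinderCB; `loss_fn(lr)` fakes a training loss."
    lrs, losses, best, lr = [], [], math.inf, start_lr
    for _ in range(max_steps):
        loss = loss_fn(lr)
        lrs.append(lr); losses.append(loss)
        if loss < best: best = loss
        # same stop rule as the callback: NaN, or much worse than the best loss so far
        if math.isnan(loss) or loss > best*max_mult: break
        lr *= gamma   # after n steps the learning rate is start_lr * gamma**n
    return lrs, losses

# Synthetic loss with its minimum at lr = 1e-2
fake_loss = lambda lr: (math.log10(lr) + 2)**2 + 1

lrs, losses = lr_range_test(fake_loss)
print(len(lrs), f'{lrs[-1]:.3f}')
```

Because the learning rate grows geometrically, the sampled points are evenly spaced on a log axis, which is why the plot uses a log x-scale.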

cbs = [DeviceCB()]
learn = MomentumLearner(get_model(), dls, F.cross_entropy, lr=1e-5, cbs=cbs)
learn.fit(3, cbs=LRFinderCB())

Exported source

@fc.patch
def lr_find(self:Learner, gamma=1.3, max_mult=3, start_lr=1e-5, max_epochs=10):
    self.fit(max_epochs, lr=start_lr, cbs=LRFinderCB(gamma=gamma, max_mult=max_mult))

lr_find is a shorter way of using LRFinderCB.

MomentumLearner(get_model(), dls, F.cross_entropy, cbs=cbs).lr_find()